Build Your First AI Agent

In under 200 lines of Python, I built an agentic AI mentor that teaches, evaluates, and adapts. Here's how you can do it too.

ChatGPT. Gemini. Claude. Or any LLM. Ask a question and you get a polished answer in seconds.

Impressive? Sure. Transformative? Not really. Answers alone do not drive change.

Now imagine this: An AI that keeps the conversation alive. It asks clarifying questions. It remembers last week’s blockers. It nudges you toward the next step. That’s not a chatbot. That’s an AI agent.

In this article, I’ll show you exactly what Agentic AI is, how it works, and how you can build your own agent from scratch, fast.

What Is Agentic AI?

Agentic AI is artificial intelligence that acts on its own. It can make decisions, adapt to changes, and pursue goals with minimal human input. Instead of just responding to commands, it takes initiative, solves problems, and learns from experience.

Think of it as AI with agency. Traditional chatbots wait for prompts. An agent, by contrast, can observe a situation, set its own internal goals, and take steps toward those goals without needing to be micromanaged.

Put simply, Agentic AI is autonomy with purpose. It can:

Act with intention rather than wait passively for user input

Perceive and adapt by asking follow-up questions, clarifying vague instructions, or suggesting next steps

Problem-solve within boundaries and tools you define, choosing its own tactics

Persist through loops until it considers the task complete

Here’s a mental shortcut:

Autonomy tells the AI it may act.

Agency tells the AI it should act toward a clear objective.

Contrast that with a standard LLM exchange:

User: “I want to be a better delegator.”

ChatGPT: “Here are five tips.”

An AI agent digs deeper:

Agent: “What part of delegation feels hardest right now?”

User: “I worry tasks won’t meet my standards.”

Agent: “Describe a recent example when this worry became reality.”

(The loop continues until a concrete plan emerges.)

Most LLMs respond once and stop. Agents don’t stop until the job is done.

The Agent Loop

The secret sauce that turns a one-off chatbot into an autonomous helper is a simple, repeatable cycle known as the agent loop. In practice, each turn of the loop covers six steps:

Construct the prompt: Combine the current goal, any tool descriptions, and recent feedback into a single prompt string.

Query the LLM: Send the prompt to your model of choice and receive a structured response.

Parse the response: Extract the action in a machine-readable form, for example a tool name plus its arguments.

Execute the action: Call the API or any other tool, run code, or perform whatever real‑world step the agent selected.

Capture feedback: Get the results from the action taken, which could include success messages, error codes, or data.

Decide to continue or end: Based on the feedback, determine whether to continue the loop (a new prompt) or to end the process.
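The six steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not the mentor agent built later in the article: `run_agent_loop` and `scripted_llm` are hypothetical names, and the "model" is a stand-in that replays canned JSON replies so the loop can run without an API key.

```python
import json
from typing import Callable


def run_agent_loop(llm: Callable[[list[dict]], str],
                   tools: dict[str, Callable[..., str]],
                   goal: str,
                   max_loops: int = 5) -> list[dict]:
    """Run the six-step loop and return the message history."""
    memory: list[dict] = [{"role": "user", "content": goal}]
    for _ in range(max_loops):
        # 1. Construct the prompt: instructions, tool names, and history.
        system = ('Reply only with JSON such as {"action": "finish", "args": {}}. '
                  "Tools: " + ", ".join(tools))
        prompt = [{"role": "system", "content": system}] + memory

        # 2. Query the LLM (here: any callable that maps messages to text).
        reply = llm(prompt)
        memory.append({"role": "assistant", "content": reply})

        # 3. Parse the response into an action name and arguments.
        try:
            decision = json.loads(reply)
            action, args = decision["action"], decision.get("args", {})
        except (json.JSONDecodeError, KeyError, TypeError):
            memory.append({"role": "system",
                           "content": "Feedback: reply must be JSON with an 'action' key."})
            continue

        # 4. Execute the action, but only if it is a registered tool.
        if action not in tools:
            memory.append({"role": "system",
                           "content": f"Feedback: unknown tool '{action}'."})
            continue
        result = tools[action](**args)

        # 5. Capture feedback so the next prompt includes the outcome.
        memory.append({"role": "system", "content": f"Feedback: {result}"})

        # 6. Decide to continue or end.
        if action == "finish":
            break
    return memory


def scripted_llm(replies: list[str]) -> Callable[[list[dict]], str]:
    """Hypothetical stand-in for a real model: replays canned replies in order."""
    remaining = iter(replies)
    return lambda prompt: next(remaining)


demo_tools = {
    "teach": lambda concept: f"explained {concept}",
    "finish": lambda summary: "session concluded",
}
history = run_agent_loop(
    scripted_llm([
        '{"action": "teach", "args": {"concept": "delegation"}}',
        '{"action": "finish", "args": {"summary": "done"}}',
    ]),
    demo_tools,
    goal="I want to be a better delegator.",
)
for message in history:
    print(message["role"], "|", message["content"])
```

Swapping `scripted_llm` for a function that calls a real model is the only change needed to make the sketch live; the outer loop, not the model, still decides when to stop.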

A few practical tips:

Give the agent clear tool choices: For example, “You may call clarify, teach, evaluate, or finish.” A fixed set of options makes parsing much simpler.

Store only what matters: Memory grows every loop; trim or summarise older steps to keep token costs low.

Stay in charge: Your outer loop controls when to break, throttle, or sandbox actions. The agent suggests; your code decides.

Master this pattern and you can bolt an LLM onto almost any workflow, from spreadsheet updates to expense processing to leadership coaching.

Prompts and Memory

To turn a language model into an agent, you need to automate two things:

1. Prompting programmatically: Instead of a person typing questions and reading answers, your code handles the conversation loop. It builds prompts from context, sends them to the model, parses responses, and decides what to do next.

2. Managing memory explicitly: Language models don’t remember past calls. You must give them memory by resending important context each turn, and deciding what’s worth including.

Prompting in Practice

An agent's prompt is more than a static instruction. It's a growing conversation that evolves with every turn. Each message is labeled by a role to guide how the model understands and responds:

system: Sets the rules for the AI. It defines the model's behavior, tone, and boundaries. You use it to tell the AI how it should act.

user: Represents everything the human says. This includes questions, goals, clarifications, and answers.

assistant: Holds the AI's earlier replies. Resending them keeps the conversation coherent and helps the model stay consistent.

These roles form the structure of the agent's memory. They allow the AI to track context, follow up intelligently, and make informed decisions with each interaction.

Here’s an example:

<system>

You are a leadership mentor.

<user>

I want to be a better delegator.

<assistant>

What part of delegation feels hardest right now?

<user>

I worry tasks won’t meet my standards.

Each step adds to the thread. Your agent appends a user message, calls the model, then appends the assistant’s reply, and repeats.
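In Python, a thread like this is usually held as a list of role/content dictionaries. The sketch below shows one turn of the append, call, append cycle; `fake_model` and `take_turn` are hypothetical names, and `fake_model` returns a canned reply in place of a real LLM call.

```python
def fake_model(messages: list[dict]) -> str:
    """Hypothetical stand-in for an LLM call; always returns the same follow-up."""
    return "Describe a recent example when this worry became reality."


# The conversation so far, one dictionary per message.
messages = [
    {"role": "system", "content": "You are a leadership mentor."},
    {"role": "user", "content": "I want to be a better delegator."},
    {"role": "assistant", "content": "What part of delegation feels hardest right now?"},
]


def take_turn(messages: list[dict], user_text: str, model=fake_model) -> str:
    """Append the user message, call the model, then append its reply."""
    messages.append({"role": "user", "content": user_text})
    reply = model(messages)
    messages.append({"role": "assistant", "content": reply})
    return reply


take_turn(messages, "I worry tasks won't meet my standards.")
print([m["role"] for m in messages])
# → ['system', 'user', 'assistant', 'user', 'assistant']
```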

To keep this loop working:

Keep prompts concise and structured.

Use consistent message roles (system, user, assistant).

Trim the history when it gets too long.
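Trimming can be as simple as keeping the system message plus the most recent few messages. The helper below is a sketch under that assumption: `trim_history` is a hypothetical name, and it counts messages rather than tokens, which is cruder than what a production agent would likely need.

```python
def trim_history(messages: list[dict], max_messages: int = 6) -> list[dict]:
    """Return a copy with the leading system message plus the newest messages."""
    # Keep the first message only if it is the system prompt.
    head = messages[:1] if messages and messages[0]["role"] == "system" else []
    tail = messages[len(head):]
    # Guard against max_messages == 0, where tail[-0:] would keep everything.
    recent = tail[-max_messages:] if max_messages > 0 else []
    return head + recent


history = [{"role": "system", "content": "You are a leadership mentor."}]
for i in range(10):
    role = "user" if i % 2 == 0 else "assistant"
    history.append({"role": role, "content": f"message {i}"})

trimmed = trim_history(history, max_messages=4)
print(len(history), "->", len(trimmed))
# → 11 -> 5
```

Summarising the dropped messages into a single note, instead of discarding them, is a natural next step once plain truncation starts losing important context.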

Managing Memory

Most agents rely on two types of memory:

Conversation memory holds the recent message history. This is what you resend to the model on each turn to maintain context and continuity.

Code-level state stores important internal values the model doesn’t need to see. This might include things like raw API responses, counters, or scores.

A typical way to maintain conversation memory looks like this:

self.memory.append({"role": "assistant", "content": response_content})
full_prompt.extend(self.memory)

This pattern keeps the prompt grounded in the full interaction history, allowing the agent to make decisions based on everything said so far.
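To make the split between the two memory types concrete, here is a small sketch that keeps them in separate fields. `AgentState`, `tool_calls`, and `raw_results` are hypothetical names chosen for illustration; only `memory` is ever sent to the model.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    # Conversation memory: resent to the model on every turn.
    memory: list[dict] = field(default_factory=list)
    # Code-level state: kept for your own logic, never sent to the model.
    tool_calls: dict[str, int] = field(default_factory=dict)
    raw_results: list[str] = field(default_factory=list)

    def record(self, action: str, reply: str, result: str) -> None:
        """Store the model's reply in memory and the bookkeeping in code-level state."""
        self.memory.append({"role": "assistant", "content": reply})
        self.tool_calls[action] = self.tool_calls.get(action, 0) + 1
        self.raw_results.append(result)

    def build_prompt(self, system_prompt: str) -> list[dict]:
        """Build the message list for the next model call from conversation memory."""
        full_prompt = [{"role": "system", "content": system_prompt}]
        full_prompt.extend(self.memory)
        return full_prompt


state = AgentState()
state.record("teach", '{"action": "teach", "args": {"concept": "feedback"}}', "Concept explained.")
prompt = state.build_prompt("You are a leadership mentor.")
print(len(prompt), state.tool_calls)
# → 2 {'teach': 1}
```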

Building the Mentor Agent in Python

I’ve been tinkering with AI agents lately, and I wanted to show you how to build one from scratch using Python, LiteLLM, and Google Colab.

Why These Tools?

Google Colab: Zero setup. Just open a notebook and run. Perfect for sharing experiments or workshops.

LiteLLM: A clean wrapper that lets you talk to GPT-4o (or other models) with minimal boilerplate. Great for building agents quickly.

Python: The lingua franca of AI. Enough said.

Let’s walk through the setup.

Step 1: Set Up Your Environment

Let’s start with the basics, installing the right library and loading your API key securely:

!pip install litellm --quiet

import os
import json
from litellm import completion
from google.colab import userdata

In this project, I’m using OpenAI’s GPT-4o via API. But thanks to LiteLLM, you can swap in other providers like Anthropic, Google Gemini, Mistral, or even local models.

To follow along with OpenAI, get your API key from platform.openai.com and securely store it in Colab.

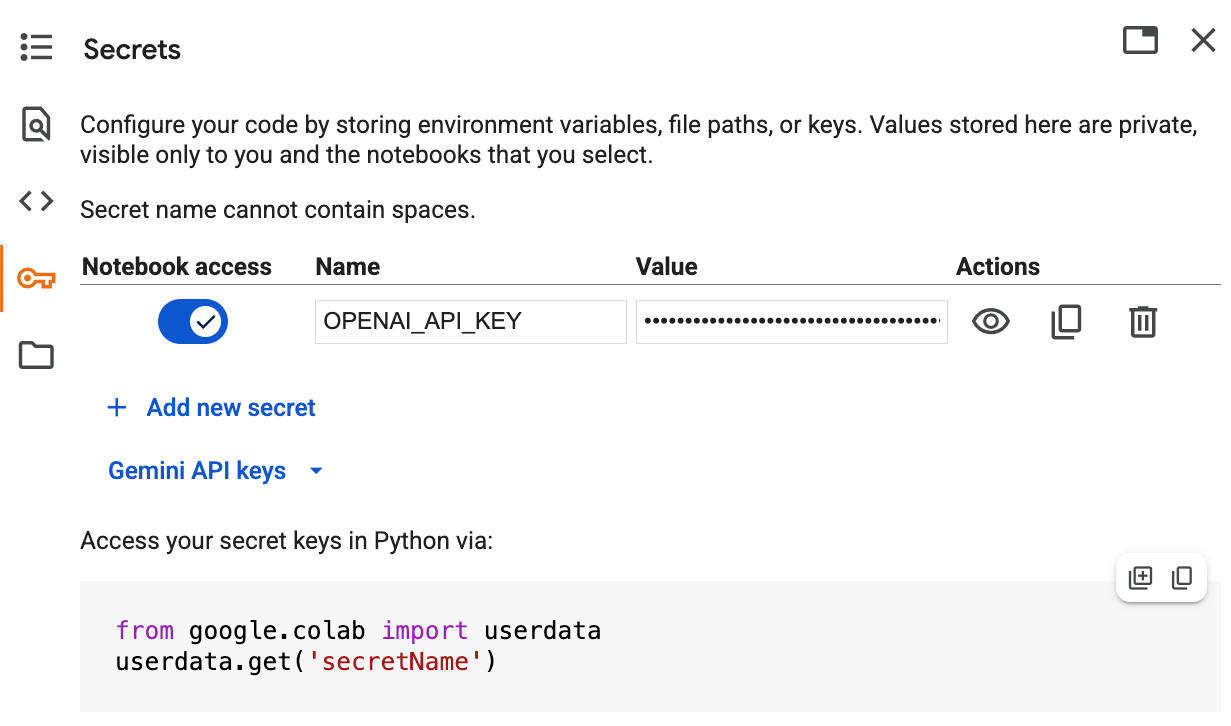

To securely store your API keys in Google Colab, use the Secrets tab in the left sidebar. This allows you to save sensitive values like API keys without hardcoding them into your notebook.

Once stored, you can access them in code like this:

api_key = userdata.get("OPENAI_API_KEY")
os.environ["OPENAI_API_KEY"] = api_key

This keeps your secrets safe, avoids exposing them in shared notebooks, and works seamlessly across sessions. No need to call set() in the notebook; just manage them through the UI.
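If you run the notebook outside Colab, or forget to add the secret, the first sign of trouble is usually an authentication error deep inside the first model call. A small guard can fail earlier with a clearer message. `require_env` is a hypothetical helper and uses only the standard library.

```python
import os


def require_env(name: str) -> str:
    """Return the environment variable's value, or raise a clear error if it is missing."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set. Add it as a secret or environment variable first.")
    return value


# Demonstration with a throwaway variable name, not a real key.
os.environ["DEMO_API_KEY"] = "placeholder-value"
print(require_env("DEMO_API_KEY"))
```

Calling `require_env("OPENAI_API_KEY")` right after loading the secret would stop the notebook before any model call is attempted.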

Step 2: Configure the Agent (Role, Memory, Tools)

We’re not building a chatbot or a coder. We’re building a mentor. That means we need to be explicit about how it should behave.

In this step, we define the agent’s goal, initialize its memory, register its tools and, most importantly, set its role as a leadership mentor. This gives the model clear boundaries and purpose from the very start.
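One detail worth noticing in the class that follows: the tool registry stores Python callables next to their descriptions, and the prompt builder serializes it with `json.dumps`, falling back to each function's docstring. An alternative, sketched here with a hypothetical `describe_tools` helper, is to hand the model only the description and argument fields and keep the callables out of the prompt entirely.

```python
import json


def describe_tools(tools: dict) -> str:
    """Return a JSON description of each tool, omitting the callable itself."""
    public = {
        name: {"description": spec["description"], "args": spec["args"]}
        for name, spec in tools.items()
    }
    return json.dumps(public, indent=2)


# A registry with the same shape as the one used in this article.
demo_tools = {
    "clarify": {
        "function": print,  # placeholder callable for this sketch
        "description": "Asks the user a question to get more details about their goal.",
        "args": {"question": "The clarifying question to ask the user (string)."},
    },
}
print(describe_tools(demo_tools))
```

Either approach works; the trade-off is that the explicit version makes it obvious exactly what text the model receives.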

class LeadershipMentorAgent:
    """
    A simple AI Agent that acts as a leadership mentor.
    """

    def __init__(self, goal):
        """
        Initializes the agent with a goal, memory, and a set of tools.
        """
        self.goal = goal
        self.memory = []
        self.max_loops = 10  # Set a maximum number of interactions

        # A registry of available "mentoring" tools for the agent.
        self.tools = {
            "clarify": {
                "function": self.clarify,
                "description": "Asks the user a question to get more details about their goal. Use this if the goal is vague.",
                "args": {"question": "The clarifying question to ask the user (string)."}
            },
            "teach": {
                "function": self.teach,
                "description": "Explains a leadership concept, theory, or strategy to the user. Use this to provide knowledge.",
                "args": {"concept": "The concept to explain (string)."}
            },
            "evaluate": {
                "function": self.evaluate,
                "description": "Asks the user a question to see if they understood a concept. Use this to check for comprehension.",
                "args": {"question": "The question to evaluate understanding (string)."}
            },
            "finish": {
                "function": self.finish,
                "description": "Ends the mentoring session with a summary or concluding remarks.",
                "args": {"summary": "A final message for the user (string)."}
            }
        }

    # These functions define the agent's "actions". They interact with the user via the console.
    def clarify(self, question: str) -> str:
        """Asks the user for more information."""
        print(f"🤖 Mentor: {question}")
        user_response = input("You: ")
        return f"User's response: '{user_response}'"

    def teach(self, concept: str) -> str:
        """Explains a concept to the user."""
        # In a real app, you might fetch this from a knowledge base.
        print(f"🤖 Mentor: Let's talk about '{concept}'. A key aspect of this is...")
        # Mock explanation
        if "feedback" in concept.lower():
            print("Effective feedback is specific, actionable, and delivered with empathy. It focuses on behavior, not personality.")
        else:
            print("It's important for a leader to understand this principle to guide their team effectively.")
        return "Concept explained."

    def evaluate(self, question: str) -> str:
        """Checks if the user understood the concept."""
        print(f"🤖 Mentor: {question}")
        user_response = input("You: ")
        return f"User's response to evaluation: '{user_response}'"

    def finish(self, summary: str) -> str:
        """Ends the conversation."""
        print(f"🤖 Mentor: {summary}")
        return "Session concluded."

    def _construct_prompt(self) -> list[dict]:
        """
        Constructs the prompt for the LLM with instructions, tools, and history.
        """
        # The system prompt defines the agent's persona and logic.
        system_prompt = f"""
        You are an AI Leadership Mentor. Your purpose is to help the user with their goal: "{self.goal}".
        You will guide them by asking questions, teaching concepts, and evaluating their understanding.

        You have access to the following tools:
        {json.dumps(self.tools, indent=2, default=lambda o: o.__doc__)}

        Follow this thought process:
        1. Start by understanding the user's goal. If it's unclear, use 'clarify'.
        2. Based on their goal, use 'teach' to explain a relevant leadership skill.
        3. After teaching, use 'evaluate' to check if they grasped the concept.
        4. Based on their responses, decide the next step. Either teach a new concept or continue clarifying.
        5. When the user's initial goal has been addressed, use 'finish' to end the conversation.
        6. Your responses MUST be in a valid JSON format.

        Example of a tool-using response:
        {{
            "action": "teach",
            "args": {{"concept": "Giving constructive feedback"}}
        }}

        Example of a concluding response:
        {{
            "action": "finish",
            "args": {{"summary": "Great work today! We've covered the basics of feedback."}}
        }}
        """

        full_prompt = [{"role": "system", "content": system_prompt}]
        full_prompt.extend(self.memory)

        # Add the goal as the first user message if memory is empty
        if not self.memory:
            full_prompt.append({"role": "user", "content": f"My leadership goal is: {self.goal}"})

        return full_prompt

Step 3: Implement the Mentor Agent Loop

Now the agent comes to life. This isn’t just a one-off prompt and response. It’s an interactive, iterative session, guided by the agent loop we described earlier:

Prompt → LLM → Response → Action → Feedback → Completed?

But this time, the loop is tailored for mentorship. Each turn, the agent:

Revisits the user’s leadership goal

Decides whether to clarify, teach, or evaluate based on the current context

Interacts directly with the user through the console, making the session feel personal

Captures feedback after each action to inform its next decision

Concludes with a final summary once the goal has been addressed or the session is complete

This structure gives the agent just enough autonomy to feel thoughtful, while keeping it grounded in the mentoring role you defined.
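The most fragile point in a loop like this tends to be parsing the model's reply. Models sometimes wrap JSON in Markdown code fences or return something that is valid JSON but not an object. The helper below is a defensive sketch, not part of the original agent: `parse_action` is a hypothetical name, and it raises `ValueError` for anything it cannot turn into an action name and an arguments dictionary.

```python
import json

FENCE = "`" * 3  # the three-backtick Markdown fence, built here to keep this example readable


def parse_action(raw: str) -> tuple[str, dict]:
    """Extract (action, args) from a model reply, tolerating Markdown code fences."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (with or without a language tag)...
        text = text.split("\n", 1)[1] if "\n" in text else ""
        # ...and everything from the closing fence onward.
        text = text.rsplit(FENCE, 1)[0]
    data = json.loads(text)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or "action" not in data:
        raise ValueError("reply must be a JSON object with an 'action' key")
    args = data.get("args", {})
    if not isinstance(args, dict):
        raise ValueError("'args' must be a JSON object")
    return data["action"], args


plain = '{"action": "teach", "args": {"concept": "time blocking"}}'
fenced = FENCE + "json\n" + plain + "\n" + FENCE
print(parse_action(plain))
print(parse_action(fenced) == parse_action(plain))
```

Because every failure surfaces as `ValueError`, the calling loop needs only one `except` clause to send corrective feedback to the model and try again.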

Here’s the full loop that powers the experience:

    # Method of LeadershipMentorAgent: add it to the class from Step 2.
    def run(self):
        """
        The main agent loop for the mentoring session.
        """
        print(f"🎯 Mentor Agent activated. Goal: {self.goal}\n")
        loop_count = 0

        while loop_count < self.max_loops:
            loop_count += 1
            print(f"--- Interaction {loop_count}/{self.max_loops} ---")

            # 1. Construct the prompt
            prompt = self._construct_prompt()

            # 2. Query the LLM for the next action
            print("🤔 Mentor is thinking...")
            try:
                response = completion(
                    model="gpt-4o",  # Using a more capable model for nuanced tasks
                    messages=prompt,
                    temperature=0.7,
                    max_tokens=512
                )
                response_content = response.choices[0].message.content.strip()
            except Exception as e:
                print(f"An error occurred with the LLM call: {e}")
                break

            self.memory.append({"role": "assistant", "content": response_content})

            # 3. Parse the LLM's response
            try:
                parsed_response = json.loads(response_content)
                print(f"✅ Mentor's decided action: {parsed_response['action']}")
            except (json.JSONDecodeError, KeyError, TypeError):
                # TypeError covers valid JSON that is not an object (for example, a list).
                print(f"🚨 Error: Invalid JSON from LLM: {response_content}")
                feedback = "Invalid response format. Please use the 'action' key in a JSON object."
                self.memory.append({"role": "system", "content": f"Feedback: {feedback}"})
                continue

            # 4. Execute the chosen action
            action_name = parsed_response.get("action")
            action_args = parsed_response.get("args", {})

            if action_name in self.tools:
                tool_function = self.tools[action_name]["function"]
                try:
                    result = tool_function(**action_args)

                    # 5. Capture feedback for the next loop
                    feedback = f"The user's response was: '{result}'"
                    print(f"🔧 Feedback captured.\n")
                    self.memory.append({"role": "system", "content": f"Feedback: {feedback}"})

                    # 6. Decide to continue or end
                    if action_name == "finish":
                        print("\n🏁 Mentor session has ended.")
                        break
                except Exception as e:
                    print(f"Error executing tool '{action_name}': {e}")
                    break
            else:
                feedback = f"Unknown tool '{action_name}'. Please choose from available tools."
                self.memory.append({"role": "system", "content": f"Feedback: {feedback}"})
        else:
            # Runs only when the loop ends without a break, so a session that
            # finishes on the last allowed interaction does not print this warning.
            print("\n⚠️ Mentor reached maximum interactions. Ending session.")

Step 4: Start the Mentoring Session

Once everything is in place, just run the script to launch your mentoring session:

if __name__ == "__main__":
    try:
        user_goal = input("Welcome! What leadership goal would you like to work on today?\nYou: ")
        if user_goal:
            agent = LeadershipMentorAgent(goal=user_goal)
            agent.run()
        else:
            print("No goal provided. Exiting.")
    except KeyboardInterrupt:
        print("\nProcess interrupted by user. Exiting.")

The agent will greet you, ask for your leadership goal, and guide you through a structured session. It will clarify your intent, teach relevant concepts, evaluate your understanding, and help you plan one concrete next step.

You can go through multiple rounds until your goal is addressed or the session ends naturally.

You can view the full AI agent code on GitHub:

→ github.com/rafapaez/leadership-mentor-agent

Step 5: See It in Action

Now that everything’s wired up, here’s how the agent performs in a real session. This sample shows how it clarifies your goal, teaches key concepts, and responds dynamically based on your input:

Welcome! What leadership goal would you like to work on today?

You: time management

🎯 Mentor Agent activated. Goal: time management

--- Interaction 1/10 ---

🤔 Mentor is thinking...

✅ Mentor's decided action: clarify

🤖 Mentor: Can you describe a specific time management challenge you are facing in your leadership role?

You: to many interruptions

🔧 Feedback captured.

--- Interaction 2/10 ---

🤔 Mentor is thinking...

✅ Mentor's decided action: teach

🤖 Mentor: Let's talk about 'The concept of time blocking, a method where you allocate specific chunks of time for different activities, and set boundaries to minimize interruptions.'. A key aspect of this is...

It's important for a leader to understand this principle to guide their team effectively.

🔧 Feedback captured.

--- Interaction 3/10 ---

🤔 Mentor is thinking...

✅ Mentor's decided action: evaluate

🤖 Mentor: How would you use time blocking to manage interruptions during your day?

You: I'll start blocking focus time slots

🔧 Feedback captured.

--- Interaction 4/10 ---

🤔 Mentor is thinking...

✅ Mentor's decided action: teach

🤖 Mentor: Let's talk about 'The concept of setting boundaries and communicating your schedule to your team or colleagues, which involves letting them know about your focus times and the best times to approach you for discussions or questions.'. A key aspect of this is...

It's important for a leader to understand this principle to guide their team effectively.

🔧 Feedback captured.

--- Interaction 5/10 ---

🤔 Mentor is thinking...

✅ Mentor's decided action: evaluate

🤖 Mentor: How will you communicate your focus times to your team to help manage interruptions?

You: I'll be transparent with my availability

🔧 Feedback captured.

--- Interaction 6/10 ---

🤔 Mentor is thinking...

✅ Mentor's decided action: finish

🤖 Mentor: Great progress! By using time blocking and communicating your focus times transparently, you can effectively manage interruptions. Keep implementing these strategies to improve your time management.

🔧 Feedback captured.

🏁 Mentor session has ended.

You can continue the session for multiple rounds until the agent decides the goal has been addressed, or you stop it manually.

Final Thoughts: Stop Reading, Start Building

Reading about agents is useful. Building one changes how you think.

You’ve now seen a real agent loop. Not a toy, not a slide deck example. Just a clear goal, a handful of tools, and a bit of logic to let the model lead with purpose.

The Leadership Mentor Agent isn’t perfect. But it’s real. It clarifies, teaches, and adapts. You can fork it, remix it, and ship something better this afternoon.

This isn’t about theory. It’s about practice. Stop scrolling. Open your editor. Build your agent.

Not just to write code, but to build something that collaborates. Guides. Mentors.

That future isn’t far off. You just started it.

Thanks for reading The Engineering Leader. 🙏
